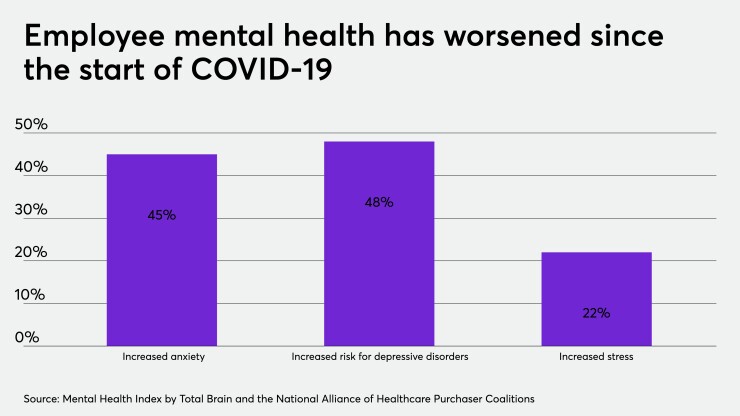

Interest in mental health benefits increased dramatically in 2020, for obvious reasons: mental health problems spiked worldwide as people struggled with isolation, fear and, in many cases, the illness or death of family and friends.

Dozens of startups emerged to fill the urgent need for mental health support.

In my discussions with HR executives, I found that many of them didn't know what questions to ask when evaluating these offerings. I've spent my entire career working in clinical research. Here's my advice on what HR pros should ask for when evaluating mental health benefits.

- Peer-reviewed research supporting the actual product. Research supporting the broad type of intervention is not enough; it must support the specific product or service someone is trying to sell you, and it should be published in a recognized peer-reviewed journal. Published research goes through a rigorous screening process by independent experts, which helps ensure that the analyses are accurate and the conclusions are fair. Any solution without peer-reviewed research may not be scientifically sound. This should be your very first question, because a "no" could be a deal breaker.

- Whether the company has conducted randomized controlled trials. This is the gold standard for study design: participants are randomly assigned to receive either the solution being evaluated or something else, called a comparator (e.g., a waitlist, treatment as usual or another solution). Consider depression. Symptoms tend to wax and wane, and some people will get better over time without any intervention. A randomized controlled trial can show whether participants' improvement was due to the solution or to some other factor, such as the passage of time.

- Whether the solution has been prospectively evaluated. This means that baseline measurements were taken at the start of the study, before participants began the program. I'm aware of at least one online mental health program that asked participants at the end of the study how they felt, and then asked how that compared to how they felt at the start of the program. This is called retrospective evaluation, and in scientific circles it's a big red flag, because people's recollections of how they felt weeks or months earlier are unreliable. Prospective evaluation, where participants are assessed at the start of a study, is the scientifically sound method.

- Whether the research explored long-term results. Solutions that have been on the market for at least a year should have some peer-reviewed research on long-term results: how program participants fared 3 months, 6 months and, ideally, 12 months after the program ended. Were they able to maintain the results they achieved during the program? Long-term outcomes are crucial.

- How the company accounts for missing data in its research studies. Some people almost always drop out of research studies for one reason or another; in other words, the number of people who enroll is almost never the same as the number who complete the final assessment. Data from the people who dropped out is known as missing data. Researchers should document any missing data and report completion rates. If 100 people started the study and 85 completed it, results should be reported for all 100 participants, not just the 85 who completed it, because completers typically have better outcomes than people who drop out. This approach is known as intention-to-treat analysis. The analyses should include the full sample to get the real story of how enrollees fared.

- How transparent the vendor is about its research. I'd be wary of any company that would not willingly share its research. Companies that follow Open Science principles are ideal. These companies pre-register their studies, publicly stating their plans before collecting data, so they can't quietly publish only the studies with positive results. They also typically share their statistical code, and while that code may not mean much to an HR pro, their willingness to share it speaks volumes: they have nothing to hide. Also ask whether they can share a preprint of any peer-reviewed study. A preprint is the full, original report of a study's findings: the draft that is originally submitted to the journal. Asking for preprints helps ensure that you see all of the data analyzed, since journals are sometimes more willing to publish exciting findings, where significant differences are detected, than analyses that found no differences over time or between groups (a tendency called publication bias).
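The comparator logic behind randomized controlled trials can be illustrated with a short simulation. This is a minimal Python sketch under made-up assumptions: each participant's symptoms would improve by about 4 points on their own (natural recovery), a hypothetical product adds about 2 more, and the constants `NATURAL_RECOVERY`, `TRUE_EFFECT` and `NOISE_SD` are invented for illustration rather than drawn from any real study.

```python
import random
import statistics

# Hypothetical simulation: every constant below is an illustrative assumption,
# not data from any real study or product.
random.seed(42)

N_PER_ARM = 500
NATURAL_RECOVERY = 4.0  # assumed average symptom improvement with no intervention
TRUE_EFFECT = 2.0       # assumed extra improvement caused by the product
NOISE_SD = 5.0          # assumed person-to-person variability

# Each person's improvement if they received nothing (symptoms wax and wane).
untreated_change = [random.gauss(NATURAL_RECOVERY, NOISE_SD) for _ in range(2 * N_PER_ARM)]

# Randomly assign people to the product arm or the comparator arm.
random.shuffle(untreated_change)
product_arm = [c + TRUE_EFFECT for c in untreated_change[:N_PER_ARM]]
comparator_arm = untreated_change[N_PER_ARM:]

# Without a comparator, all of the improvement looks like it came from the product.
pre_post_only = statistics.mean(product_arm)
# With a randomized comparator, natural recovery is subtracted out.
rct_estimate = pre_post_only - statistics.mean(comparator_arm)

print(f"Improvement in product arm alone: {pre_post_only:.1f} points")
print(f"Difference vs. randomized comparator: {rct_estimate:.1f} points")
```

Looking only at the product arm credits the product with roughly the whole 6-point improvement; subtracting the comparator arm's improvement recovers something close to the assumed 2-point effect. Random assignment is what makes that subtraction fair, because the two groups differ only by chance.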
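The 100-versus-85 missing-data example can be worked through as simple arithmetic. The Python sketch below uses invented numbers (every completer is assumed to improve by 6 points) and fills in dropouts with one simple, conservative rule: assume no change from baseline (baseline observation carried forward). Real trials usually rely on more sophisticated methods, such as multiple imputation or mixed-effects models, so treat this as an illustration of the principle rather than a recommended analysis.

```python
import statistics

# Invented numbers for illustration only; "improvement" is the drop in a
# symptom score from baseline, so higher is better.
N_ENROLLED = 100
N_COMPLETED = 85
n_dropouts = N_ENROLLED - N_COMPLETED

# Assume every completer improved by 6 points (hypothetical).
completer_improvements = [6.0] * N_COMPLETED

# Completers-only analysis: silently ignores the 15 people who left.
completers_only = statistics.mean(completer_improvements)

# Simple intention-to-treat analysis: keep all 100 enrollees and, under the
# conservative "baseline observation carried forward" assumption, count each
# dropout as showing no change (0 points of improvement).
all_enrollees = completer_improvements + [0.0] * n_dropouts
itt_estimate = statistics.mean(all_enrollees)

completion_rate = N_COMPLETED / N_ENROLLED

print(f"Completion rate: {completion_rate:.0%}")        # Completion rate: 85%
print(f"Completers only: {completers_only:.1f} points")  # Completers only: 6.0 points
print(f"Intention-to-treat: {itt_estimate:.1f} points")  # Intention-to-treat: 5.1 points
```

Even under this simple rule, the headline result shrinks from 6.0 to 5.1 points once the 15 dropouts are counted, which is why a completers-only number can make a program look better than it is.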

If you ask these questions and vendors seem uncomfortable or avoid responding, treat it as a red flag and look at other options. A lack of transparency could signal weak research practices and give you reason to doubt the effectiveness of their product. After a very challenging few years, few would disagree that patients deserve the best mental health care available.