- Key Insight: Discover how routine employee AI queries create critical enterprise data-exposure blind spots.

- What's at Stake: Regulatory penalties, costly breaches and reputational damage for firms across industries.

- Forward Look: Prepare for AI governance mandates, revised data policies and mandatory onboarding training.

- Source: Bullets generated by AI with editorial review

It only takes one question typed into ChatGPT about health plans, company policies or workplace documents to put sensitive data at risk.

Over one in four professionals have

"The speed of AI adoption has wildly outpaced policy and training," says Malte Schiebelmann, SVP of product at Smallpdf. "The reality is that many employees are unknowingly jeopardizing company security in the name of efficiency."

Thirty-eight percent of employees are

A

"The biggest mistake many leaders are making right now is assuming that employees know better when they often don't," Schiebelmann says. "That disconnect creates a perfect storm where workers feel empowered to use AI, but aren't equipped to protect sensitive employee data."

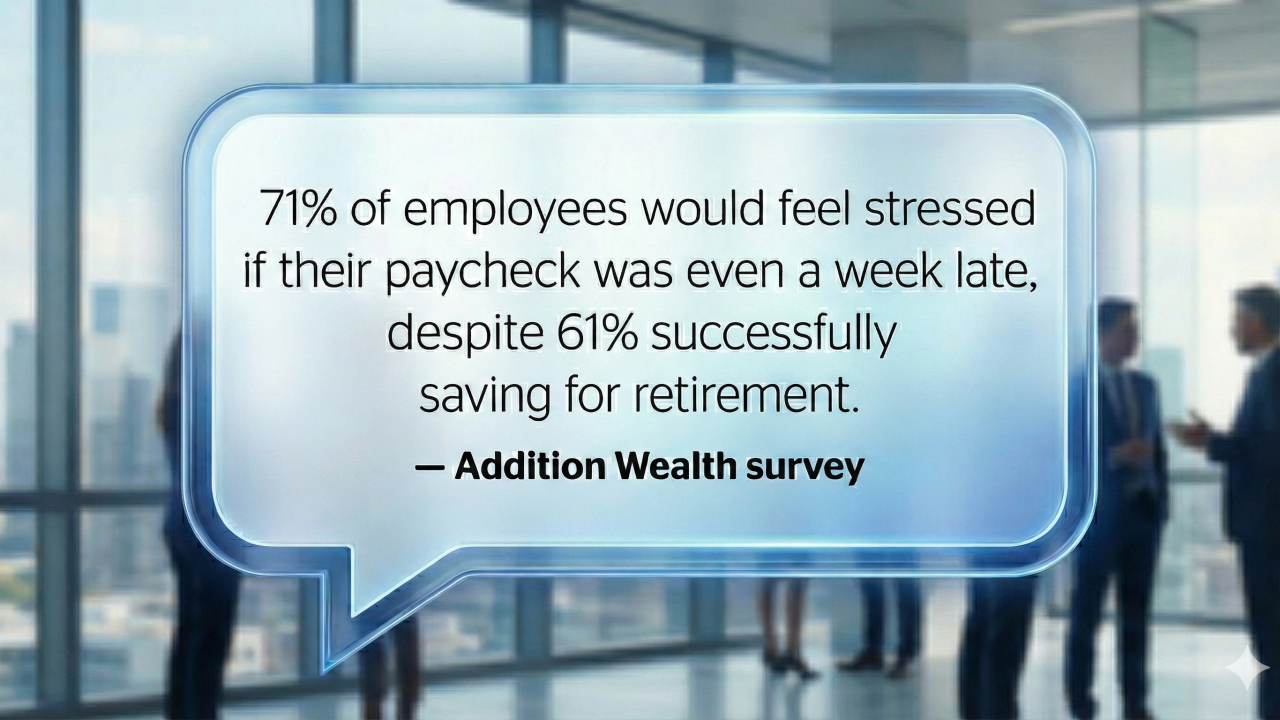

The price of ignorance is too high

While the exact cost of entering workplace information into AI tools

"This [should be] a growing concern for teams," Schiebelmann says. "When employees input sensitive information into AI tools, that data can be stored, exposed, or used to train future outside models."

To set up the right checks and balances and protect organizations over the long term, Schiebelmann advises leaders to embed AI literacy directly into onboarding, rewrite existing data policies to include rules and limits on AI use, and offer ongoing training and resources that focus explicitly on the real-world scenarios employees are likely to encounter every day.

"It's critical that leaders stop treating AI use [and misuse] as an IT-only concern when it's just as much a culture issue," Schiebelmann says. "Leaders must prioritize guardrails that protect both employees and the company from these escalating risks in the AI era."